Why Using Motion in Audio & Music Matters | Sound Particles Talks #1

On Thursday, October 13th, the first Sound Particles Talks took place: a new livestream show to discuss industry topics, demonstrate products in a practical way and connect with the audio community.

Once a month, in a casual and fun format, Tony Joy, an audio industry specialist and Sound Particles team member, will be livestreaming on YouTube to talk about relevant topics with the audience. “Sound Particles Talks” is a place for the whole Sound Particles audience and its clients to share their opinions, thoughts, questions and feedback, but especially to connect with the audio industry by discussing topics that matter to the community.

The first talk focused on “Why Using Motion in Audio & Music Matters”, and the discussion covered how using motion in post-production and music creation can impact and heighten the listener's experience.

The Sound Particles team had an amazing time with an extremely engaging audience that actively asked questions and left comments. It felt like a great coffee chat, and we hope you enjoyed it too :)

If you couldn't join, here's a wrap-up!

From Portugal and LA, two of our dear team members met online to discuss the art of movement and why using motion in music and audio matters.

So why do we use pan to move things?

Let's start with a small story!

In 1999, two psychologists at Harvard ran an experiment in which every participant was shown a video of six people, three in white shirts and three in black shirts, passing basketballs around. Participants had to keep count of the passes made by the people in white shirts.

See the video below:

During the video, a person in a gorilla suit strolls into the middle of the scene, faces the camera, thumps its chest and then leaves, spending nine seconds on screen. Did you see the gorilla?

About 50% of the participants did not see the gorilla. Why?

Well, researchers call this "inattentional blindness" or "selective attention", and it has probably happened to you before, whether visually or with sound.

This leads us to the cocktail party effect: our ability to focus on one conversation among many. You can tune your ears to two people talking 10 ft away, with a group of people standing between you, and still follow that couple while ignoring the group right in front of you.

Even when all these voices are equally loud, we are fantastically good at tuning into one conversation over many others, yet we seem to absorb very little information from the conversations we reject.

The same thing happens while listening to your favorite song, and it probably happens to you all the time: the more you listen to a song, the more new sounds you discover that you hadn't noticed before!

And why is our brain doing this?

Because it has limited resources and needs to be energy efficient! To save energy, our brain does two things:

It creates habits and ignores known sounds! For example, our heart is beating all the time, but our brain usually chooses to ignore it. The same happens with the tick-tock of a clock: the sound is there all the time, but you don't hear it because your brain identifies it as a known sound and ignores it. This happens in every predictable situation.

To understand this better, let's go back to human evolution. When our ancestors lived in forests, their brains constantly had to make sure the environment was safe, and any sound out of place would immediately put them on alert.

Our brain is always looking for something new. If a sound is steady and predictable, our neurons go back to sleep to save energy, because those sounds are already known. But the moment there's a small change (in pitch, loudness, duration or spatial movement), those neurons wake up again.

Which leads to the question:

How can we use this when we do sound design for music?

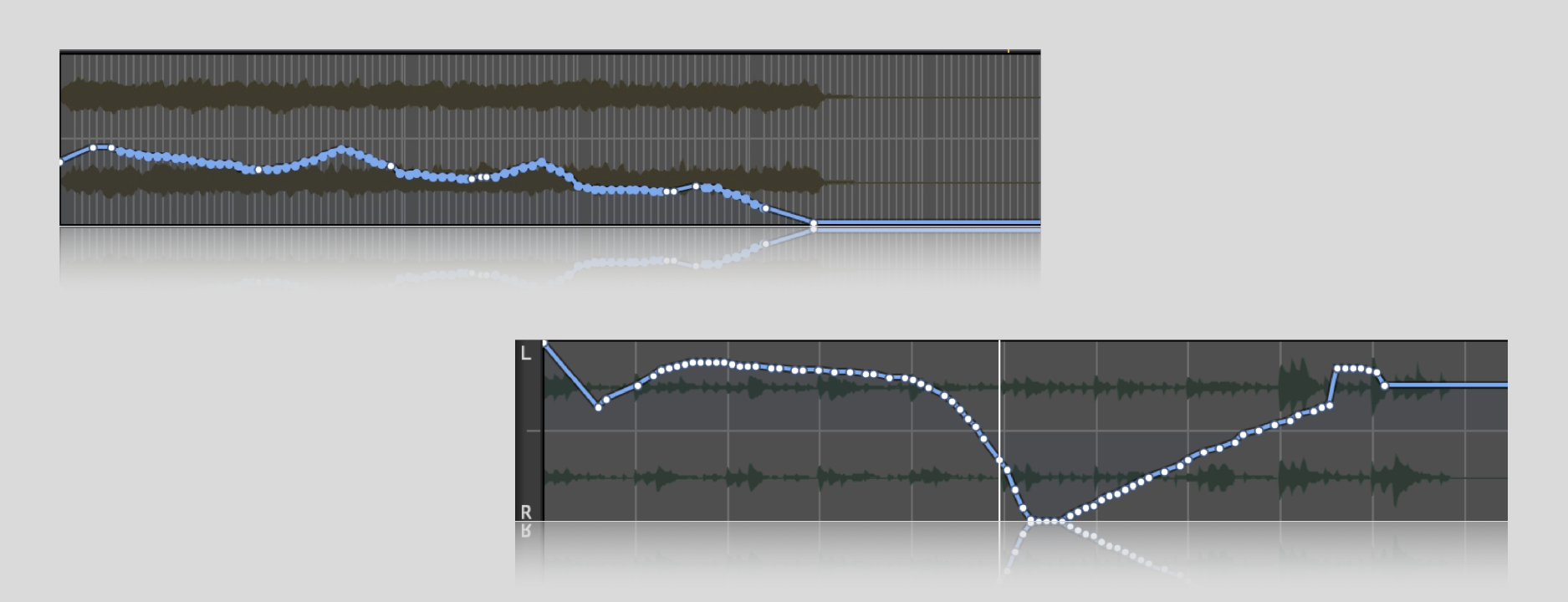

Remember the cocktail party effect? Its downside is that we get almost no information from anything the brain rejects. In music and sound design, we have all been working around this for years by automating volume and pan. In the example shown, Tony automated an instrument to get the brain's attention: it suddenly got louder, and when Tony pulled it back, the ear got used to it and the brain identified the sound as 'safe' again.

This is the psychology behind why you've been automating volume, pan and everything else all this time.
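To make pan automation a little more concrete, here is a minimal sketch (not taken from the talk, and not how any particular DAW implements it) of a slow left-right pan sweep, the kind of gentle, continuous change that keeps a sound from being filed away as "known". It assumes a sine/cosine constant-power pan law, one common choice that keeps perceived loudness roughly even as the sound moves; the function names `constant_power_pan` and `pan_sweep` are hypothetical.

```python
import math


def constant_power_pan(position: float) -> tuple[float, float]:
    """Return (left_gain, right_gain) for a pan position in [-1, 1].

    -1 is hard left, 0 is centre, 1 is hard right. Assumes a sine/cosine
    constant-power law, so left_gain**2 + right_gain**2 is always 1.
    """
    position = max(-1.0, min(1.0, position))  # clamp out-of-range values
    angle = (position + 1.0) * math.pi / 4.0  # map [-1, 1] onto [0, pi/2]
    return math.cos(angle), math.sin(angle)


def pan_sweep(duration_s: float, rate_hz: float, steps: int) -> list[tuple[float, float, float]]:
    """Hypothetical automation lane: a sinusoidal left-right sweep.

    Returns (time_s, left_gain, right_gain) breakpoints spread evenly
    over duration_s, with the pan position following sin(2*pi*rate_hz*t).
    """
    points = []
    for i in range(steps):
        t = duration_s * i / (steps - 1) if steps > 1 else 0.0
        position = math.sin(2.0 * math.pi * rate_hz * t)
        left, right = constant_power_pan(position)
        points.append((t, left, right))
    return points


if __name__ == "__main__":
    # One full left-right cycle over 4 seconds, sampled at 5 breakpoints.
    for t, left, right in pan_sweep(duration_s=4.0, rate_hz=0.25, steps=5):
        print(f"t={t:.1f}s  L={left:.3f}  R={right:.3f}")
```

A constant-power law is used here instead of a plain linear crossfade because a linear crossfade tends to dip in loudness at the centre, which would itself be an unintended change in level.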

After all these great explanations, Tony took some time to put everything you just learned into action by showing Sound Particles products, which are all about motion!

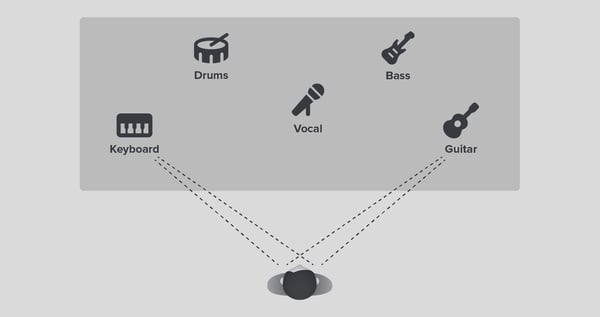

With Sound Particles software, you can add individual movement to each particle and make it move either in a straight line or in rotation. You can also add velocity and acceleration.
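As a rough illustration of what straight-line and rotational motion with velocity and acceleration mean, here is a small Python sketch based on basic kinematics. It is not the Sound Particles API or its internal engine: `Vec3`, `linear_position` and `rotation_position` are hypothetical names, and the coordinate convention (x to the right, y to the front, z up, in metres) is an assumption.

```python
import math
from dataclasses import dataclass


@dataclass
class Vec3:
    """Hypothetical 3D position in metres (assumed: x = right, y = front, z = up)."""
    x: float
    y: float
    z: float


def linear_position(start: Vec3, velocity: Vec3, acceleration: Vec3, t: float) -> Vec3:
    """Straight-line motion under constant acceleration: p(t) = p0 + v*t + 0.5*a*t^2."""
    return Vec3(
        start.x + velocity.x * t + 0.5 * acceleration.x * t * t,
        start.y + velocity.y * t + 0.5 * acceleration.y * t * t,
        start.z + velocity.z * t + 0.5 * acceleration.z * t * t,
    )


def rotation_position(
    center: Vec3,
    radius: float,
    angular_velocity: float,
    t: float,
    angular_acceleration: float = 0.0,
    phase: float = 0.0,
) -> Vec3:
    """Circular motion around `center` in the horizontal (x, y) plane.

    Angles are in radians; angular_velocity is in rad/s and
    angular_acceleration in rad/s^2. Height (z) stays at center.z.
    """
    angle = phase + angular_velocity * t + 0.5 * angular_acceleration * t * t
    return Vec3(
        center.x + radius * math.cos(angle),
        center.y + radius * math.sin(angle),
        center.z,
    )


if __name__ == "__main__":
    # A particle 10 m in front that drifts to the right and speeds up.
    for t in (0.0, 1.0, 2.0):
        p = linear_position(Vec3(0.0, 10.0, 0.0), Vec3(1.0, 0.0, 0.0), Vec3(0.5, 0.0, 0.0), t)
        print(f"linear   t={t:.0f}s -> ({p.x:.2f}, {p.y:.2f}, {p.z:.2f})")
    # A particle circling the listener at 2 m, a quarter-turn per second.
    for t in (0.0, 1.0, 2.0):
        p = rotation_position(Vec3(0.0, 0.0, 0.0), 2.0, math.pi / 2, t)
        print(f"rotation t={t:.0f}s -> ({p.x:.2f}, {p.y:.2f}, {p.z:.2f})")
```

Evaluating positions as a function of time, rather than stepping them frame by frame, keeps the motion independent of any particular update rate.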

It's about being creative and knowing what needs to move! Explore the tools and all the opportunities motion has for you!

We would like to thank everyone who attended and joined the discussion with amazing and insightful comments. We hope you had a great time, just like we did 🥰

If you couldn't attend, the livestream is available below:

We’re preparing the next talk and your feedback is welcome. What topic would you like to see discussed next? 👇

To find out when the next Sound Particles Talks takes place, subscribe to our newsletter!

Topics: Sound Particles, Audio Software, Sound Design, Film, Sound for Film and TV, Audio tech, 3D audio, Surround Sound, Gaming Audio
